Hey,

I attempted to make this extension myself, but with my limited knowledge of scripting, I unfortunately had problems updating my database and pulling from it to create a search. So I figured I'd post it here in hopes that you guys could implement it and have better luck than I did. I'll explain the layout and how I attempted to script it, to give you a good basis to work from.

This is meant to better link communities and viewers, and to let people find exactly the stream they're looking for regardless of stream size or what game they're playing. We'll start with the broadcaster's side of this feature.

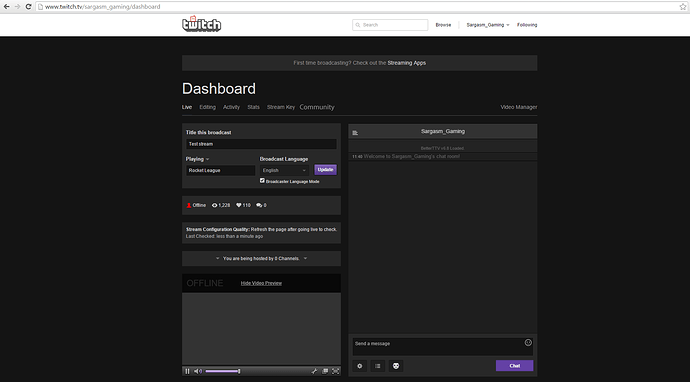

Here we have the basic dashboard. I've injected a "Community" button into the `dash_nav` div of the dashboard. This keeps the dashboard looking clean and uncluttered when the feature isn't being used.

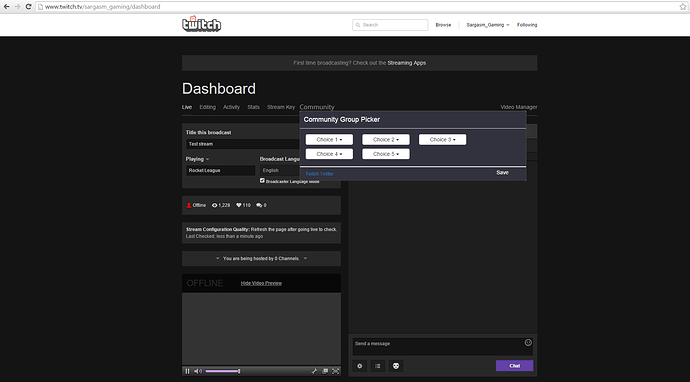

Clicking it opens the selection panel.

As you can see, there are five dropdown menus and a Save button. I chose five dropdowns so that broadcasters can't pick every single community, even ones they don't fit in, just to get as much exposure as possible. Limiting it to five means they have to choose the ones they actually fit into and that best suit their stream.
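Here's a rough sketch of how the five-choice limit could be enforced when Save is clicked. It assumes empty dropdowns arrive as `None` or `""`, and it also rejects picking the same community in two dropdowns, which is my own addition since five separate menus would otherwise allow duplicates. The names `MAX_COMMUNITIES` and `validate_selections` are hypothetical.

```python
from __future__ import annotations

from typing import Optional

MAX_COMMUNITIES = 5  # the five-dropdown limit described above


def validate_selections(selections: list[Optional[str]]) -> list[str]:
    """Return the chosen communities, rejecting more than five or duplicates.

    Hypothetical helper: empty dropdowns (None or "") are simply skipped.
    """
    chosen = [s for s in selections if s]
    if len(chosen) > MAX_COMMUNITIES:
        raise ValueError(f"at most {MAX_COMMUNITIES} communities may be selected")
    if len(set(chosen)) != len(chosen):
        raise ValueError("each community may only be selected once")
    return chosen
```

For example, `validate_selections(["Speedrunner", "", None, "Art", ""])` keeps just the two real picks.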

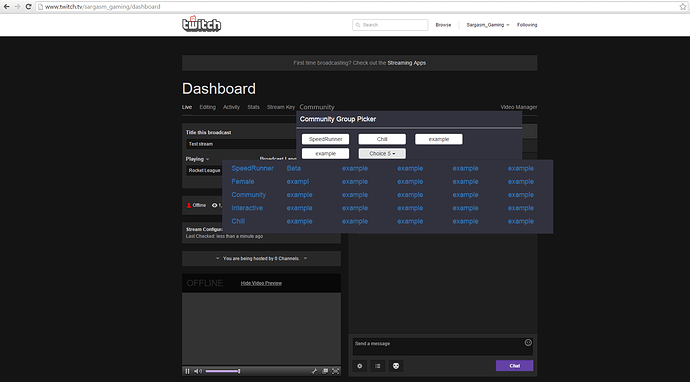

Clicking one of them opens a dropdown listing the communities to choose from. I went this route instead of letting people create their own groups to keep the database clean and make it easy to keep everyone grouped together for searches. It also has the added benefit of not letting people add any... not-so-appropriate groups/tags.
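A minimal sketch of the fixed-list idea, assuming the same list is used both to build the dropdown options and to re-check whatever gets saved. The re-check matters because anyone can send a save request directly without going through the dropdown. The community names here are placeholders, and `ALLOWED_COMMUNITIES`, `dropdown_options`, and `check_allowed` are hypothetical.

```python
from __future__ import annotations

# Placeholder list: the real set of communities is up to whoever runs the extension.
ALLOWED_COMMUNITIES = frozenset({"Speedrunner", "Art", "Music", "Retro", "Competitive"})


def dropdown_options() -> list[str]:
    """Options shown in each dropdown, sorted so every menu looks the same."""
    return sorted(ALLOWED_COMMUNITIES)


def check_allowed(selections: list[str]) -> list[str]:
    """Reject anything not on the fixed list (e.g. a hand-crafted request)."""
    unknown = [s for s in selections if s not in ALLOWED_COMMUNITIES]
    if unknown:
        raise ValueError(f"unknown communities: {unknown}")
    return selections
```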

After selecting the five they want, they click the Save button to update the database.

Here's how I was scripting it: I created a variable, `broadcaster`, which pulled the page URL and trimmed it down to just the broadcaster's name, plus a variable for each of the five choices. As you can see in picture 3, whichever choice is selected updates the text of its button. I used that text as a variable to point to which form in the database should be updated. The database consists of multiple forms, one for each community that can be selected (a form for speedrunners with a list of names, and so on), and each form is only a single list of names.
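Below is a sketch of that layout, with an in-memory dict standing in for the real database. It assumes the dashboard URL looks like `https://www.twitch.tv/<name>/dashboard` (so the first path segment is the broadcaster), and `broadcaster_from_url` and `CommunityDB` are hypothetical names. One design choice worth calling out: `save` first removes the broadcaster from every form and then adds them back to the selected ones, so changing your picks later doesn't leave your name in communities you dropped. With a real database, those two steps would ideally run in a single transaction.

```python
from __future__ import annotations

from urllib.parse import urlparse


def broadcaster_from_url(url: str) -> str:
    """Trim a dashboard URL down to the broadcaster name (assumes /<name>/dashboard)."""
    segments = [seg for seg in urlparse(url).path.split("/") if seg]
    if not segments:
        raise ValueError(f"no broadcaster name in {url!r}")
    return segments[0].lower()  # Twitch logins are lowercase


class CommunityDB:
    """Stand-in for the database: one 'form' (a set of names) per community."""

    def __init__(self, communities: list[str]) -> None:
        self.forms: dict[str, set[str]] = {c: set() for c in communities}

    def save(self, broadcaster: str, selections: list[str]) -> None:
        # Clear old choices first so dropped communities don't keep the name.
        for names in self.forms.values():
            names.discard(broadcaster)
        # The button text picks the form to update; unknown text raises KeyError.
        for community in selections:
            self.forms[community].add(broadcaster)

    def members(self, community: str) -> list[str]:
        """Every broadcaster in one community's form, sorted for display."""
        return sorted(self.forms[community])
```

For example, saving `["Speedrunner"]` and later `["Art"]` for the same broadcaster leaves them only in the Art form.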

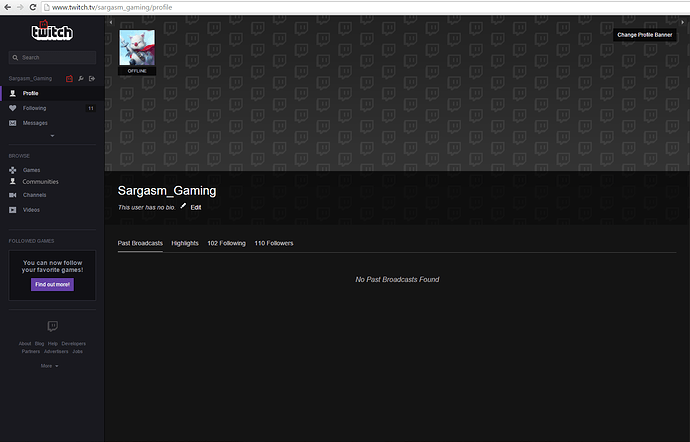

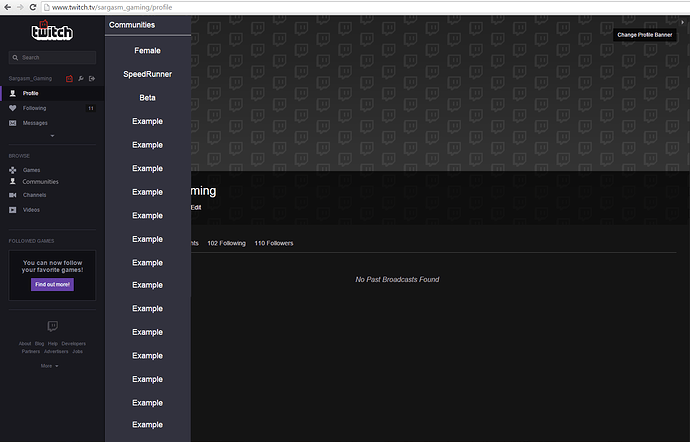

Now let's get to the viewer's side of things. First, I injected a button into the left sidebar under Games. As usual, I tried to keep it clean and professional-looking.

Clicking it opens a single scrollable list of the communities. Each entry is just a button that opens a modal.

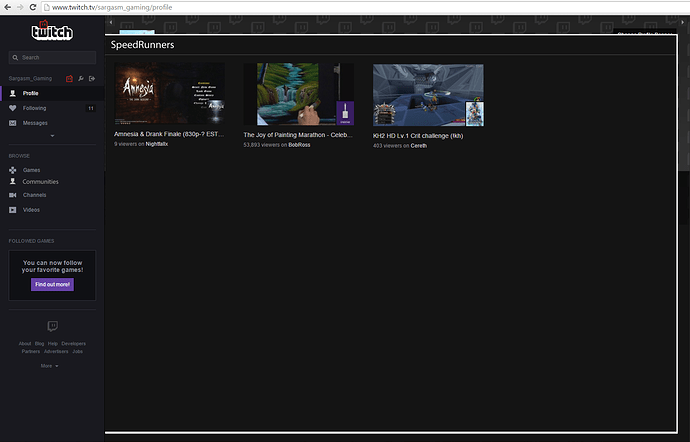

If users want to find a new speedrunner, regardless of game or stream size, they can choose Speedrunner. Did you know Diablo 2 has a good-sized speedrunning community? Click that button and let's see which streamers are speedrunning.

OK, so this is where I ran into problems, and this screenshot may or may not be photoshopped Kappa. Also, I don't have anyone who is speedrunning any games at the moment >.< Well, Bob Ross is kinda speedrunning some paintings MiniK. But let's just assume this list is populated only with streamers who are speedrunning.

So how I attempted (and failed) to script this portion was with a repeated call function. Because our database forms contain only single-string names, the community chosen in the second picture points to which form in the database to pull from, and a function then loops through that form, calling Twitch's GET API with each name as a string to populate the modal.
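Instead of one request per name, a sketch like this batches the names. It assumes Twitch's current Helix "Get Streams" endpoint (`https://api.twitch.tv/helix/streams`), which accepts up to 100 `user_login` values per request and only returns channels that are currently live, so offline names just don't show up. The `first=100` parameter is there because the default page size is 20. Each real request also needs `Client-Id` and `Authorization: Bearer <token>` headers, which this sketch leaves out; `stream_request_urls` and `live_entries` are hypothetical helpers.

```python
from __future__ import annotations

from urllib.parse import urlencode

HELIX_STREAMS = "https://api.twitch.tv/helix/streams"
MAX_LOGINS_PER_REQUEST = 100  # Helix limit on user_login values per call


def stream_request_urls(names: list[str]) -> list[str]:
    """Build one Get Streams URL per batch of up to 100 names from a community form."""
    urls = []
    for start in range(0, len(names), MAX_LOGINS_PER_REQUEST):
        batch = names[start:start + MAX_LOGINS_PER_REQUEST]
        # A list of pairs repeats the key: first=100&user_login=a&user_login=b
        pairs = [("first", MAX_LOGINS_PER_REQUEST)] + [("user_login", n) for n in batch]
        urls.append(f"{HELIX_STREAMS}?{urlencode(pairs)}")
    return urls


def live_entries(response: dict) -> list[tuple[str, int]]:
    """Pull (user_name, viewer_count) pairs from a Get Streams JSON body for the modal."""
    return [(s["user_name"], s["viewer_count"]) for s in response.get("data", [])]
```

So a community with 250 members turns into three requests instead of 250.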

Thanks for reading if you made it this far,

Sargasm